Adding Google BigQuery Data Warehouses

A Google BigQuery data warehouse is an instance of Google BigQuery that contains the tables and views that you want to access as model facts and dimensions. It also contains aggregate table instances that either you or the AtScale engine creates in a BigQuery dataset that you specify.

Before you begin

-

You must have the

datawarehouses_adminordatawarehouses_managerealm role assigned in the Identity Broker. -

Ensure that you know the BigQuery dataset and ProjectID to use for building aggregate tables in the data warehouse. AtScale reads and writes aggregate data to this dataset. The AtScale service account user must have ALL privileges for this dataset.

-

Assign your Google BigQuery service account the following roles:

BigQuery Data EditorandBigQuery Job User.The service account used will be the one used for setting up system query roles. If aggregates are stored in a project different from the project where fact data resides, then this service account also needs

BigQuery Data ViewerandBigQuery Job Userfor all the projects that contain fact data. -

The Google BigQuery service account used for setting up large/small query roles needs to have read access on all the projects with fact data, as well as the project where aggregate dataset resides. At the minimum, it requires the

BigQuery Data ViewerandBigQuery Job Userroles. -

If you want to enable impersonation for the data warehouse, complete the steps described in Setting Up Impersonation for Google BigQuery.

Google BigQuery Views cannot be previewed in Design Center.

Authentication

You can authenticate with a JSON credential file. For more information on Google authentication types, see Google Cloud Authentication.

To enable authentication, you need to do a few things in advance.

When using the JSON credential file for your Google Cloud Platform service account:

-

Ensure that the file can be accessed by AtScale.

-

The file for the service accounts can be created from the Google Cloud Platform console from the Menu > IAM & admin > Service accounts. The file contains seven keys and their associated values. For example:

{ "name": "projects/my-project-123/serviceAccounts/my-sa-123@my-project-123.iam.gserviceaccount.com", "projectId": "my-project-123", "uniqueId": "113948692397867021414", "email": "my-sa-123@my-project-123.iam.gserviceaccount.com", "displayName": "my service account", "etag": "BwUp3rVlzes=", "oauth2ClientId": "117249000288840666939" }

The authentication that is based on Google Compute Node service account credentials can be used with the following setup:

- A user-managed service account is attached to the Google Compute Node that runs AtScale.

- The service account has access to project(s) or impersonates another service account that has access in Google BigQuery.

- If the service account has access to multiple Google BigQuery projects, then multiple AtScale data warehouses can be set up corresponding to the needed projects.

You can use the following commands to check which projects the compute node service account has access to:

gcloud config listgcloud projects list

Procedure

To add a new Google BigQuery data warehouse:

-

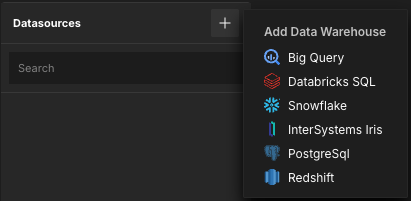

In Design Center, open the Datasources panel.

-

Click the + icon and select Big Query.

The Add Data Warehouse panel opens.

-

Enter a unique Name for the data warehouse.

-

Enter the External connection ID for the data warehouse. You can optionally enable the Override generated value? toggle to default this value to the name of the data warehouse.

-

In the Aggregate Project ID field, enter name of the BigQuery ProjectID in which the aggregate schema resides.

The service account used for setting up system query roles will be the one that creates aggregates. This account must have All privileges on the project.

-

In the Aggregate Schema field, enter the name of the BigQuery dataset to use when creating aggregate tables. You must create this dataset; AtScale does not create one automatically.

-

(Optional) Enable impersonation for the data warehouse by selecting the Enable to impersonate the client when connecting to data warehouses checkbox. Note that this requires you to have completed the steps described in Setting Up Impersonation for Google BigQuery.

When this option is enabled, the following options appear:

- Always use Canary Queries: Determines whether canary queries are required in the event of aggregate misses.

- Allow Partial Aggregate Usage: Enables mixed aggregate and raw data queries.

NoteAtScale cannot import data-loader bundles when impersonation is enabled. It is recommended that you import your desired data-loader bundles (commonly used for training) before enabling impersonation, or use a separate AtScale installation for training purposes.

-

In the Access Controls section, add the users and groups that you want to be able to access the data warehouse and its data. For more information on how data warehouse security works in AtScale, see About Data Warehouse Security.

-

Add a connection to the data warehouse. You must have at least one defined to finish adding the data warehouse.

-

In the Connections section, click Connection Required. The Add Connection panel opens.

-

Enter a Name for the connection.

-

(Optional) Under Project Id for query execution, enable the use dedicated project Id for query execution prioritization option, then enter the Project ID for the BigQuery project in which queries will be executed. This value is passed when creating a job. If not specified, AtScale uses the project associated with the service account key JSON file.

-

Upload the Service account key json file associated with your Google BigQuery Connection.

-

(Optional) Click Test Connection to test the connection.

-

Click Add.

The connection is added to the data warehouse. You can add more connections as needed.

-

-

(Optional) If your data warehouse has multiple connections, you can configure their query role mappings. This determines which types of queries are permitted per connection. A query role can be assigned to only one connection at a time.

In the Query Mapping section, select the connection you want to assign to each type of query:

- Small Interactive Queries: Small user queries. These are usually BI queries that can be optimized by AtScale aggregates or ones that involve low-cardinality dimensions.

- Large Interactive Queries: Large user queries. These are usually BI queries involving full scans of the raw fact table or ones that involve high-cardinality dimensions.

- Aggregate Build Queries: Queries made when building aggregate tables. This mapping should be assigned to a connection that has more compute power, and that is not subject to resource limitations that would prevent it from building aggregate tables.

- System Queries: AtScale system processing, such as running aggregate maintenance jobs and querying system statistics.

- Canary Queries: Only available when impersonation is enabled. Queries that run periodically to monitor system response times, workload, etc.

-

Click Save Data Warehouse.